14wk-62: NLP with Disaster Tweets (Text) / Data Analysis (AutoGluon)

#!pip install autogluon.multimodal

1. Lecture Video

2. Imports

import numpy as np

import pandas as pd

#---#

from autogluon.multimodal import MultiModalPredictor # from autogluon.tabular import TabularPredictor

#---#

import warnings

warnings.filterwarnings('ignore')

3. Data

!kaggle competitions download -c nlp-getting-started

Warning: Your Kaggle API key is readable by other users on this system! To fix this, you can run 'chmod 600 /root/.kaggle/kaggle.json'

Downloading nlp-getting-started.zip to /root/Dropbox/MP

100%|████████████████████████████████████████| 593k/593k [00:00<00:00, 1.09MB/s]
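The warning above recommends `chmod 600 /root/.kaggle/kaggle.json`. The same fix can be applied from Python. This is a minimal sketch, assuming a POSIX system and the default token location `~/.kaggle/kaggle.json` (which resolves to `/root/.kaggle/kaggle.json` when running as root, as in this session); `restrict_to_owner` is a hypothetical helper name.

```python
import os
import stat


def restrict_to_owner(path: str) -> bool:
    """Hypothetical helper: set mode 0o600 (owner read/write only), like `chmod 600 path`.

    Returns True if the file existed and its mode was changed, False otherwise.
    Assumes a POSIX file system; Windows only honours the read-only bit.
    """
    if not os.path.isfile(path):
        return False
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)  # equivalent to 0o600
    return True
```

Usage: `restrict_to_owner(os.path.expanduser("~/.kaggle/kaggle.json"))`, run once before calling the Kaggle CLI again.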

!unzip nlp-getting-started.zip -d data

Archive: nlp-getting-started.zip

inflating: data/sample_submission.csv

inflating: data/test.csv

inflating: data/train.csv

df_train = pd.read_csv('data/train.csv')

df_test = pd.read_csv('data/test.csv')

sample_submission = pd.read_csv('data/sample_submission.csv')

!rm -rf data

!rm nlp-getting-started.zip

4. Analysis

df_train.head()

|  | id | keyword | location | text | target |
|---|---|---|---|---|---|
| 0 | 1 | NaN | NaN | Our Deeds are the Reason of this #earthquake M... | 1 |
| 1 | 4 | NaN | NaN | Forest fire near La Ronge Sask. Canada | 1 |
| 2 | 5 | NaN | NaN | All residents asked to 'shelter in place' are ... | 1 |
| 3 | 6 | NaN | NaN | 13,000 people receive #wildfires evacuation or... | 1 |
| 4 | 7 | NaN | NaN | Just got sent this photo from Ruby #Alaska as ... | 1 |

df_test.head()

|  | id | keyword | location | text |
|---|---|---|---|---|
| 0 | 0 | NaN | NaN | Just happened a terrible car crash |
| 1 | 2 | NaN | NaN | Heard about #earthquake is different cities, s... |
| 2 | 3 | NaN | NaN | there is a forest fire at spot pond, geese are... |
| 3 | 9 | NaN | NaN | Apocalypse lighting. #Spokane #wildfires |
| 4 | 11 | NaN | NaN | Typhoon Soudelor kills 28 in China and Taiwan |

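# step1 -- pass (no preprocessing: the raw DataFrame is used as-is)

# step2 -- create the predictor; 'target' is the label column

predictor = MultiModalPredictor(label='target')

# step3 -- train

predictor.fit(df_train)

# step4 -- predict on the test set

yhat = predictor.predict(df_test)

No path specified. Models will be saved in: "AutogluonModels/ag-20231207_033914/"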

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

2 unique label values: [1, 0]

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])
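The log explains the inference: two unique label values lead to `'binary'`. A toy version of such a rule is sketched below as a hypothetical helper `infer_problem_type`. Only the two-value rule comes from the log; the regression/multiclass fallback is an assumption, and AutoGluon's actual logic is more involved. To skip inference entirely, the log suggests passing `problem_type` at init, e.g. `MultiModalPredictor(label='target', problem_type='binary')`.

```python
def infer_problem_type(labels) -> str:
    """Hypothetical, simplified heuristic; NOT AutoGluon's implementation."""
    values = list(labels)
    n_unique = len(set(values))
    # Rule stated in the log: exactly two distinct labels -> binary classification.
    if n_unique == 2:
        return "binary"
    # Assumed fallback: float labels with fractional parts look like a regression target.
    if all(isinstance(v, float) for v in values) and any(not v.is_integer() for v in values):
        return "regression"
    # Assumed fallback: anything else is treated as multiclass.
    return "multiclass"


print(infer_problem_type([1, 0, 1, 1]))  # binary, as for the 'target' column here
```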

Global seed set to 0

AutoMM starts to create your model. ✨

- AutoGluon version is 0.8.2.

- Pytorch version is 1.13.1.post200.

- Model will be saved to "/root/Dropbox/MP/AutogluonModels/ag-20231207_033914".

- Validation metric is "roc_auc".

- To track the learning progress, you can open a terminal and launch Tensorboard:

```shell

# Assume you have installed tensorboard

tensorboard --logdir /root/Dropbox/MP/AutogluonModels/ag-20231207_033914

```

Enjoy your coffee, and let AutoMM do the job ☕☕☕ Learn more at https://auto.gluon.ai
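The validation metric chosen above is `roc_auc`. ROC AUC equals the probability that a randomly chosen positive example (`target == 1`) receives a higher score than a randomly chosen negative one, with ties counting one half; 0.5 corresponds to random guessing and 1.0 to a perfect ranking. The sketch below computes it directly from that pairwise definition. It is an illustration only (quadratic in the number of examples); AutoMM itself uses the `BinaryAUROC` metric listed in the model summary.

```python
def roc_auc(y_true, y_score):
    """ROC AUC via the pairwise (Mann-Whitney) definition; ties count 0.5."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    if not pos or not neg:
        raise ValueError("ROC AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # tie counts as half a win
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```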

1 GPUs are detected, and 1 GPUs will be used.

- GPU 0 name: NVIDIA A100-SXM4-80GB MIG 7g.80gb

- GPU 0 memory: 84.37GB/85.20GB (Free/Total)

CUDA version is 11.2.

Using 16bit None Automatic Mixed Precision (AMP)

GPU available: True (cuda), used: True

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

You are using a CUDA device ('NVIDIA A100-SXM4-80GB MIG 7g.80gb') that has Tensor Cores. To properly utilize them, you should set `torch.set_float32_matmul_precision('medium' | 'high')` which will trade-off precision for performance. For more details, read https://pytorch.org/docs/stable/generated/torch.set_float32_matmul_precision.html#torch.set_float32_matmul_precision

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]

| Name | Type | Params

----------------------------------------------------------

0 | model | MultimodalFusionMLP | 109 M

1 | validation_metric | BinaryAUROC | 0

2 | loss_func | CrossEntropyLoss | 0

----------------------------------------------------------

109 M Trainable params

0 Non-trainable params

109 M Total params

219.567 Total estimated model params size (MB)
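The size estimate appears consistent with "parameter count × bytes per parameter", using 2 bytes per parameter for the 16-bit mixed-precision setting reported earlier: 219.567 MB / 2 bytes ≈ 109.8 M parameters, which matches the "109 M" in the summary. That this is how PyTorch Lightning derives the figure is an assumption; it is an estimate, not the actual GPU memory used during training.

```python
def estimated_size_mb(n_params: int, precision_bits: int) -> float:
    """Estimated model size in MB: parameters x (bits / 8) bytes, divided by 1e6."""
    return n_params * precision_bits / 8 / 1e6


def params_from_size(size_mb: float, precision_bits: int) -> float:
    """Invert the estimate: number of parameters implied by a size in MB."""
    return size_mb * 1e6 / (precision_bits / 8)


n = params_from_size(219.567, 16)
print(f"{n:,.0f} parameters")              # about 109.8 million
print(estimated_size_mb(round(n), 32))     # the same model counted at 32-bit: about 439 MB
```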

Epoch 0, global step 26: 'val_roc_auc' reached 0.81683 (best 0.81683), saving model to '/root/Dropbox/MP/AutogluonModels/ag-20231207_033914/epoch=0-step=26.ckpt' as top 3

Epoch 0, global step 53: 'val_roc_auc' reached 0.88538 (best 0.88538), saving model to '/root/Dropbox/MP/AutogluonModels/ag-20231207_033914/epoch=0-step=53.ckpt' as top 3

Epoch 1, global step 80: 'val_roc_auc' reached 0.88780 (best 0.88780), saving model to '/root/Dropbox/MP/AutogluonModels/ag-20231207_033914/epoch=1-step=80.ckpt' as top 3

Epoch 1, global step 107: 'val_roc_auc' reached 0.87404 (best 0.88780), saving model to '/root/Dropbox/MP/AutogluonModels/ag-20231207_033914/epoch=1-step=107.ckpt' as top 3

Epoch 2, global step 134: 'val_roc_auc' reached 0.89212 (best 0.89212), saving model to '/root/Dropbox/MP/AutogluonModels/ag-20231207_033914/epoch=2-step=134.ckpt' as top 3

Epoch 2, global step 161: 'val_roc_auc' reached 0.89695 (best 0.89695), saving model to '/root/Dropbox/MP/AutogluonModels/ag-20231207_033914/epoch=2-step=161.ckpt' as top 3

Epoch 3, global step 188: 'val_roc_auc' reached 0.89258 (best 0.89695), saving model to '/root/Dropbox/MP/AutogluonModels/ag-20231207_033914/epoch=3-step=188.ckpt' as top 3

Epoch 3, global step 215: 'val_roc_auc' reached 0.89418 (best 0.89695), saving model to '/root/Dropbox/MP/AutogluonModels/ag-20231207_033914/epoch=3-step=215.ckpt' as top 3

Epoch 4, global step 242: 'val_roc_auc' was not in top 3

Epoch 4, global step 269: 'val_roc_auc' was not in top 3

Epoch 5, global step 296: 'val_roc_auc' was not in top 3

Epoch 5, global step 323: 'val_roc_auc' was not in top 3

Epoch 6, global step 350: 'val_roc_auc' was not in top 3

Epoch 6, global step 377: 'val_roc_auc' was not in top 3

Epoch 7, global step 404: 'val_roc_auc' was not in top 3

Epoch 7, global step 431: 'val_roc_auc' was not in top 3
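The "saving model ... as top 3" messages keep only the three checkpoints with the best `val_roc_auc` seen so far. The sketch below reproduces that bookkeeping with a size-3 min-heap and replays the scores printed in the log; scores for steps 242 to 431 are not printed (only "was not in top 3"), so they are left out. `top_k_checkpoints` is a hypothetical helper, not Lightning's implementation.

```python
import heapq


def top_k_checkpoints(history, k=3):
    """Return the k (score, step) pairs with the highest scores, best first.

    `history` is an iterable of (step, score) in logging order. The min-heap's
    root is the worst of the current top k, so a new score only enters if it beats it.
    """
    heap = []  # entries are (score, step)
    for step, score in history:
        if len(heap) < k:
            heapq.heappush(heap, (score, step))
        elif score > heap[0][0]:
            heapq.heapreplace(heap, (score, step))  # evict the current worst
    return sorted(heap, reverse=True)


# (global step, val_roc_auc) pairs copied from the log above
logged = [(26, 0.81683), (53, 0.88538), (80, 0.88780), (107, 0.87404),
          (134, 0.89212), (161, 0.89695), (188, 0.89258), (215, 0.89418)]
print(top_k_checkpoints(logged))
# [(0.89695, 161), (0.89418, 215), (0.89258, 188)]
```

The log also shows training ending after epoch 7, exactly ten validation checks after the last improvement at step 161. This is consistent with early stopping with a patience of 10 checks, although the log does not state it explicitly.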

Start to fuse 3 checkpoints via the greedy soup algorithm.
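"Greedy soup" (from the Model Soups paper by Wortsman et al.) averages the weights of several checkpoints: rank them by validation score, start from the best one, and add each next checkpoint only if averaging it in does not lower the validation score. The sketch below is a generic version of that idea, not AutoMM's code: weights are plain dicts of float lists instead of tensors, and `evaluate` is a hypothetical callable standing in for validation.

```python
def average_weights(checkpoints):
    """Element-wise mean of weight dicts {name: list[float]}."""
    n = len(checkpoints)
    return {
        name: [sum(vals) / n for vals in zip(*(ckpt[name] for ckpt in checkpoints))]
        for name in checkpoints[0]
    }


def greedy_soup(checkpoints, evaluate):
    """Greedy soup: evaluate(weights) -> validation score, higher is better.

    Returns the averaged weights and the number of checkpoints kept.
    """
    ranked = sorted(checkpoints, key=evaluate, reverse=True)
    soup = [ranked[0]]
    best = evaluate(average_weights(soup))
    for candidate in ranked[1:]:
        score = evaluate(average_weights(soup + [candidate]))
        if score >= best:  # keep the ingredient only if the soup does not get worse
            soup.append(candidate)
            best = score
    return average_weights(soup), len(soup)


# Toy example: one scalar weight, validation score peaks at w = 2.2.
ckpts = [{"w": [1.0]}, {"w": [3.0]}, {"w": [10.0]}]
weights, kept = greedy_soup(ckpts, lambda m: -(m["w"][0] - 2.2) ** 2)
print(weights, kept)  # {'w': [2.0]} 2
```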

You are using a CUDA device ('NVIDIA A100-SXM4-80GB MIG 7g.80gb') that has Tensor Cores. To properly utilize them, you should set `torch.set_float32_matmul_precision('medium' | 'high')` which will trade-off precision for performance. For more details, read https://pytorch.org/docs/stable/generated/torch.set_float32_matmul_precision.html#torch.set_float32_matmul_precision


AutoMM has created your model 🎉🎉🎉

- To load the model, use the code below:

```python

from autogluon.multimodal import MultiModalPredictor

predictor = MultiModalPredictor.load("/root/Dropbox/MP/AutogluonModels/ag-20231207_033914")

```

- You can open a terminal and launch Tensorboard to visualize the training log:

```shell

# Assume you have installed tensorboard

tensorboard --logdir /root/Dropbox/MP/AutogluonModels/ag-20231207_033914

```

- If you are not satisfied with the model, try to increase the training time,

adjust the hyperparameters (https://auto.gluon.ai/stable/tutorials/multimodal/advanced_topics/customization.html),

or post issues on GitHub: https://github.com/autogluon/autogluon

You are using a CUDA device ('NVIDIA A100-SXM4-80GB MIG 7g.80gb') that has Tensor Cores. To properly utilize them, you should set `torch.set_float32_matmul_precision('medium' | 'high')` which will trade-off precision for performance. For more details, read https://pytorch.org/docs/stable/generated/torch.set_float32_matmul_precision.html#torch.set_float32_matmul_precision

5. Submission

sample_submission

|  | id | target |
|---|---|---|
| 0 | 0 | 0 |
| 1 | 2 | 0 |
| 2 | 3 | 0 |
| 3 | 9 | 0 |
| 4 | 11 | 0 |
| ... | ... | ... |
| 3258 | 10861 | 0 |
| 3259 | 10865 | 0 |
| 3260 | 10868 | 0 |
| 3261 | 10874 | 0 |
| 3262 | 10875 | 0 |

3263 rows × 2 columns
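Filling `sample_submission['target']` with `yhat` directly relies on `sample_submission` and `df_test` listing the ids in the same order; judging by the first rows (ids 0, 2, 3, 9, 11 in both), they do. A more defensive option is to match predictions to rows by `id`. The sketch below does that with a left merge; `build_submission` is a hypothetical helper, not part of AutoGluon or Kaggle's tooling.

```python
import pandas as pd


def build_submission(sample_submission, test_ids, predictions):
    """Return a submission frame whose 'target' is matched to 'id', in sample order."""
    preds = pd.DataFrame({"id": list(test_ids), "target": list(predictions)})
    # A left merge keeps the row order of sample_submission; validate guards against duplicate ids.
    out = sample_submission[["id"]].merge(preds, on="id", how="left", validate="one_to_one")
    if out["target"].isna().any():
        raise ValueError("some ids in sample_submission have no prediction")
    out["target"] = out["target"].astype(int)
    return out


# Toy data (not the competition files): predictions arrive in a different order.
sample = pd.DataFrame({"id": [0, 2, 3], "target": [0, 0, 0]})
print(build_submission(sample, test_ids=[3, 0, 2], predictions=[1, 0, 1]))
```

With the objects from this notebook, the call would be `build_submission(sample_submission, df_test['id'], yhat).to_csv("submission.csv", index=False)`.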

sample_submission['target'] = yhat

sample_submission.to_csv("submission.csv", index=False)

!kaggle competitions submit -c nlp-getting-started -f submission.csv -m "AutoGluon, MultiModalPredictor"

Warning: Your Kaggle API key is readable by other users on this system! To fix this, you can run 'chmod 600 /root/.kaggle/kaggle.json'

100%|██████████████████████████████████████| 22.2k/22.2k [00:01<00:00, 12.4kB/s]

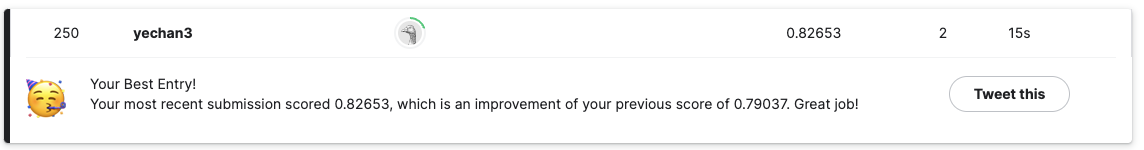

Successfully submitted to Natural Language Processing with Disaster Tweets

250/1094

0.22851919561243145

Roughly the top 23% of the leaderboard; a result at this level is reasonable.