13wk-50: Ice Cream (Outliers) / Data Analysis (AutoGluon)

#!pip install autogluon.eda

1. Lecture Video

2. Imports

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

#---#

from autogluon.tabular import TabularPredictor

import autogluon.eda.auto as auto

#---#

import warnings

warnings.filterwarnings('ignore')

3. Data

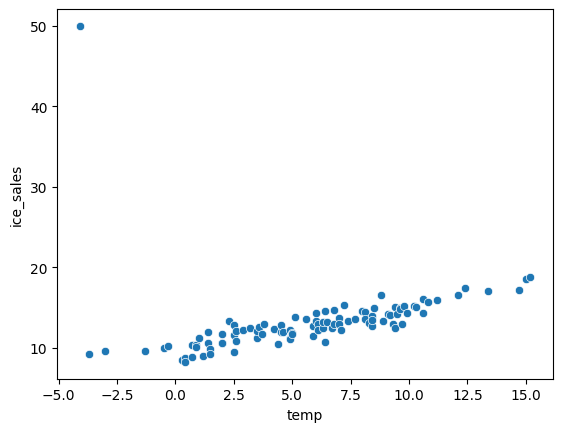

np.random.seed(43052)

temp = pd.read_csv('https://raw.githubusercontent.com/guebin/DV2022/master/posts/temp.csv').iloc[:100,3].to_numpy()

temp.sort()

ice_sales = 10 + temp * 0.5 + np.random.randn(100)

ice_sales[0] = 50

df_train = pd.DataFrame({'temp':temp,'ice_sales':ice_sales})
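The planted value `ice_sales[0] = 50` sits far above what the generating line gives at the coldest temperature: 10 + 0.5 × (−4.1) = 7.95, so the point is about 42 units (roughly 42 noise standard deviations, since the noise is `randn`) too high. A minimal sketch of how much that one point tilts an ordinary least-squares line, assuming an evenly spaced temperature grid over the table's range in place of the downloaded `temp.csv` column and NumPy's `default_rng(0)` in place of `np.random.seed(43052)`:

```python
import numpy as np

# Assumption: an evenly spaced grid over the table's temperature range stands in
# for the temp.csv column, and default_rng(0) replaces np.random.seed(43052).
rng = np.random.default_rng(0)
temp = np.linspace(-4.1, 15.2, 100)
ice_sales_clean = 10 + temp * 0.5 + rng.standard_normal(100)

ice_sales_outlier = ice_sales_clean.copy()
ice_sales_outlier[0] = 50  # same planted value as in df_train

# np.polyfit with deg=1 returns [slope, intercept] of the least-squares line
slope_clean, _ = np.polyfit(temp, ice_sales_clean, 1)
slope_outlier, _ = np.polyfit(temp, ice_sales_outlier, 1)

print(f"slope without outlier: {slope_clean:.3f}")
print(f"slope with outlier:    {slope_outlier:.3f}")
```

Because the outlier sits at the cold end (far from the mean temperature), it has high leverage and pulls the whole line's slope down, not just the fit near −4.1.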

df_train

| | temp | ice_sales |
|---|---|---|
| 0 | -4.1 | 50.000000 |
| 1 | -3.7 | 9.234175 |
| 2 | -3.0 | 9.642778 |
| 3 | -1.3 | 9.657894 |
| 4 | -0.5 | 9.987787 |
| ... | ... | ... |
| 95 | 12.4 | 17.508688 |
| 96 | 13.4 | 17.105376 |
| 97 | 14.7 | 17.164930 |
| 98 | 15.0 | 18.555388 |
| 99 | 15.2 | 18.787014 |

100 rows × 2 columns

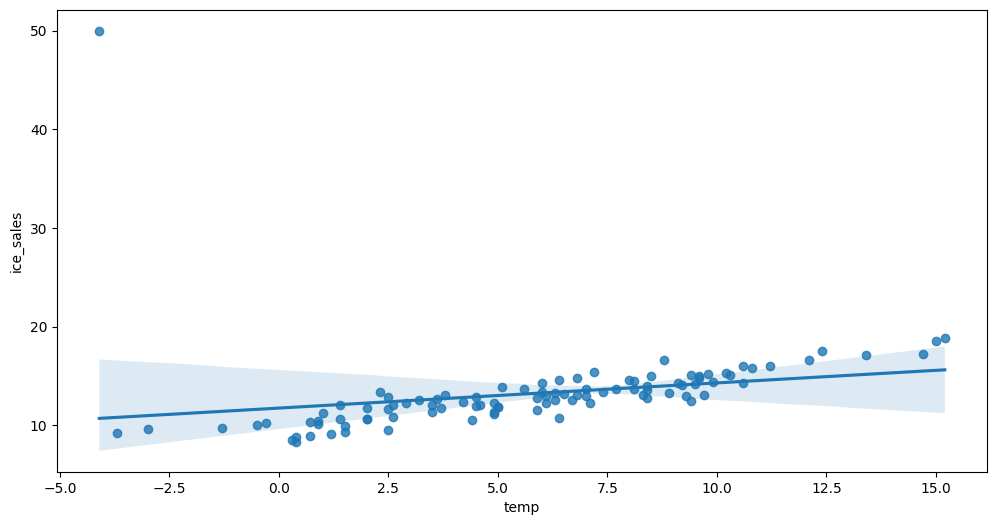

sns.scatterplot(df_train,x='temp',y='ice_sales')

4. Fitting

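# step1 -- prepare the data (already done above, so pass)

# step2 -- create the predictor, naming the target column
predictr = TabularPredictor(label='ice_sales')

# step3 -- fit the candidate models
predictr.fit(df_train)

# step4 -- predict on the training data
yhat = predictr.predict(df_train)

No path specified. Models will be saved in: "AutogluonModels/ag-20231201_104555/"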

Beginning AutoGluon training ...

AutoGluon will save models to "AutogluonModels/ag-20231201_104555/"

AutoGluon Version: 0.8.2

Python Version: 3.10.13

Operating System: Linux

Platform Machine: x86_64

Platform Version: #26~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Thu Jul 13 16:27:29 UTC 2

Disk Space Avail: 248.42 GB / 490.57 GB (50.6%)

Train Data Rows: 100

Train Data Columns: 1

Label Column: ice_sales

Preprocessing data ...

AutoGluon infers your prediction problem is: 'regression' (because dtype of label-column == float and many unique label-values observed).

Label info (max, min, mean, stddev): (50.0, 8.273155164108418, 13.17881, 4.33788)

If 'regression' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 126799.58 MB

Train Data (Original) Memory Usage: 0.0 MB (0.0% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting IdentityFeatureGenerator...

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('float', []) : 1 | ['temp']

Types of features in processed data (raw dtype, special dtypes):

('float', []) : 1 | ['temp']

0.0s = Fit runtime

1 features in original data used to generate 1 features in processed data.

Train Data (Processed) Memory Usage: 0.0 MB (0.0% of available memory)

Data preprocessing and feature engineering runtime = 0.02s ...

AutoGluon will gauge predictive performance using evaluation metric: 'root_mean_squared_error'

This metric's sign has been flipped to adhere to being higher_is_better. The metric score can be multiplied by -1 to get the metric value.

To change this, specify the eval_metric parameter of Predictor()

Automatically generating train/validation split with holdout_frac=0.2, Train Rows: 80, Val Rows: 20

User-specified model hyperparameters to be fit:

{

'NN_TORCH': {},

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, 'GBMLarge'],

'CAT': {},

'XGB': {},

'FASTAI': {},

'RF': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'KNN': [{'weights': 'uniform', 'ag_args': {'name_suffix': 'Unif'}}, {'weights': 'distance', 'ag_args': {'name_suffix': 'Dist'}}],

}

Fitting 11 L1 models ...

Fitting model: KNeighborsUnif ...

-2.2756 = Validation score (-root_mean_squared_error)

0.01s = Training runtime

0.01s = Validation runtime

Fitting model: KNeighborsDist ...

-2.6883 = Validation score (-root_mean_squared_error)

0.01s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBMXT ...

-12.8059 = Validation score (-root_mean_squared_error)

0.47s = Training runtime

0.0s = Validation runtime

Fitting model: LightGBM ...

-1.6843 = Validation score (-root_mean_squared_error)

0.17s = Training runtime

0.0s = Validation runtime

Fitting model: RandomForestMSE ...

-2.562 = Validation score (-root_mean_squared_error)

0.27s = Training runtime

0.02s = Validation runtime

Fitting model: CatBoost ...

-1.2192 = Validation score (-root_mean_squared_error)

0.25s = Training runtime

0.0s = Validation runtime

Fitting model: ExtraTreesMSE ...

-1.8631 = Validation score (-root_mean_squared_error)

0.29s = Training runtime

0.1s = Validation runtime

Fitting model: NeuralNetFastAI ...

-1.752 = Validation score (-root_mean_squared_error)

0.64s = Training runtime

0.01s = Validation runtime

Fitting model: XGBoost ...

-1.3017 = Validation score (-root_mean_squared_error)

0.1s = Training runtime

0.0s = Validation runtime

Fitting model: NeuralNetTorch ...

-1.1863 = Validation score (-root_mean_squared_error)

0.84s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBMLarge ...

-2.0798 = Validation score (-root_mean_squared_error)

0.19s = Training runtime

0.0s = Validation runtime

Fitting model: WeightedEnsemble_L2 ...

-1.1737 = Validation score (-root_mean_squared_error)

0.22s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 3.75s ... Best model: "WeightedEnsemble_L2"

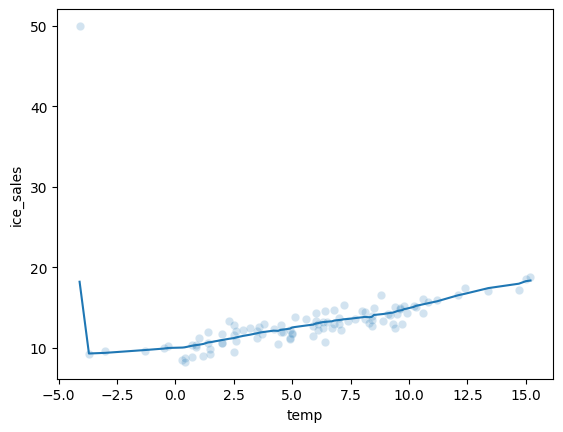

TabularPredictor saved. To load, use: predictor = TabularPredictor.load("AutogluonModels/ag-20231201_104555/")

[1000] valid_set's rmse: 1.69432

sns.scatterplot(df_train,x='temp',y='ice_sales',alpha=0.2)

sns.lineplot(df_train,x='temp',y=yhat)
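The training log reports each model's validation score as negative RMSE (the sign is flipped so that higher is better), and the best model, `WeightedEnsemble_L2` at -1.1737, beats every single model. To our understanding AutoGluon builds this ensemble with Caruana-style ensemble selection; the sketch below is a simplified version of that idea, not AutoGluon's implementation, and the function names and toy predictions are hypothetical:

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def validation_score(y_true, y_pred):
    # Sign flipped so that higher is better, as the log describes
    return -rmse(y_true, y_pred)

def greedy_ensemble_weights(y_val, val_preds, n_iter=10):
    """Forward selection with replacement (Caruana-style ensemble selection).

    val_preds maps a model name to its predictions on the validation rows.
    Each round adds one copy of whichever model most improves the averaged
    prediction; the final weights are selection counts divided by n_iter."""
    names = list(val_preds)
    counts = {name: 0 for name in names}
    total = np.zeros(len(y_val), dtype=float)  # sum of selected predictions
    for t in range(1, n_iter + 1):
        best_name, best_score = None, -np.inf
        for name in names:
            candidate = (total + val_preds[name]) / t
            score = validation_score(y_val, candidate)
            if score > best_score:
                best_name, best_score = name, score
        counts[best_name] += 1
        total += val_preds[best_name]
    return {name: c / n_iter for name, c in counts.items() if c > 0}

# Toy validation set: model A overshoots by 0.5, model B undershoots by 0.5
y_val = np.array([1.0, 2.0, 3.0, 4.0])
preds = {"A": y_val + 0.5, "B": y_val - 0.5}
print(validation_score(y_val, preds["A"]))   # -0.5
print(greedy_ensemble_weights(y_val, preds))  # {'A': 0.5, 'B': 0.5}
```

Each model alone scores -0.5, but their errors cancel when averaged, so the selected ensemble scores better than either; this is the same pattern as the ensemble edging out `NeuralNetTorch` in the log.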

5. Interpretation and Visualization

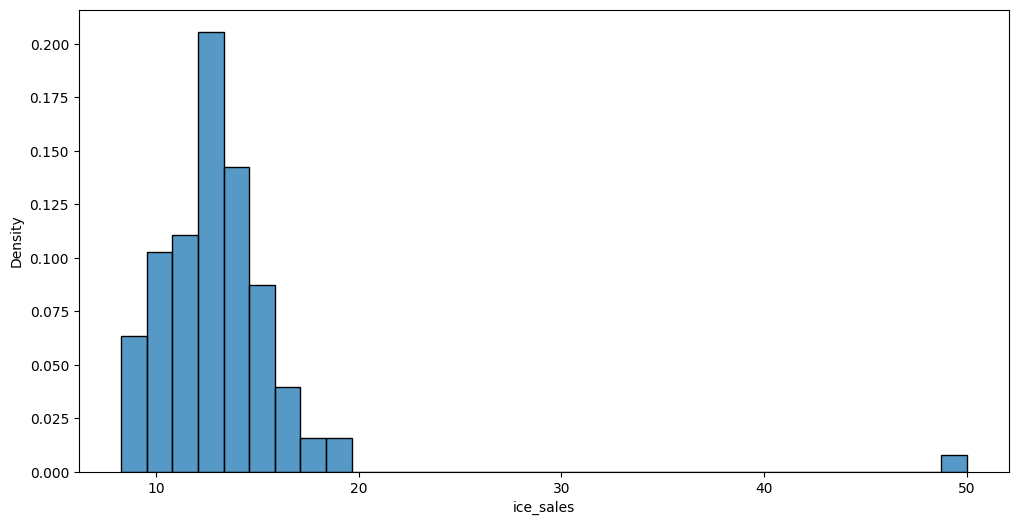

A. Visualizing the distribution of y and the (X, y) relationship

auto.target_analysis(
    train_data=df_train,
    label='ice_sales',
    fit_distributions=False
)

Target variable analysis

| | count | mean | std | min | 25% | 50% | 75% | max | dtypes | unique | missing_count | missing_ratio | raw_type | special_types |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ice_sales | 100 | 13.178805 | 4.337878 | 8.273155 | 11.296645 | 12.856589 | 14.294614 | 50.0 | float64 | 100 | | | float | |
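The quartiles in this table are enough to check the outlier by hand. A minimal sketch applying Tukey's 1.5 × IQR rule (a standard rule of thumb, not something the lecture or AutoGluon prescribes) to the reported 25% and 75% values:

```python
# Quartiles of ice_sales copied from the target-analysis table
q1 = 11.296645
q3 = 14.294614
iqr = q3 - q1  # interquartile range

# Tukey's fences: values outside [q1 - 1.5*IQR, q3 + 1.5*IQR] are flagged
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr

def is_outlier(value):
    return value < lower_fence or value > upper_fence

print(round(upper_fence, 4))                     # 18.7916
print(is_outlier(50.0), is_outlier(18.787014))   # True False
```

The planted 50.0 lies far beyond the upper fence, while the largest value shown in the `df_train` table (18.787014, row 99) sits just below it.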

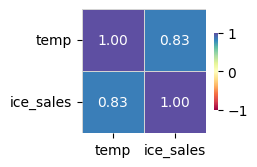

Target variable correlations

train_data - spearman correlation matrix; focus: absolute correlation for ice_sales >= 0.5

Feature interaction between temp/ice_sales in train_data
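The correlation panel uses Spearman correlation, which is Pearson correlation computed on ranks, so a single extreme value can move it only by a bounded amount. A minimal sketch on a hypothetical noise-free toy (evenly spaced temperatures on the line 10 + 0.5 × temp, with the coldest point replaced by 50 as in `df_train`); the simple `ranks` helper assumes no ties, whereas the real `temp` column may contain ties that need average ranks:

```python
import numpy as np

def ranks(a):
    """0-based ranks; assumes no ties (tied data would need average ranks)."""
    order = np.argsort(a)
    r = np.empty(len(a), dtype=float)
    r[order] = np.arange(len(a))
    return r

def pearson(x, y):
    return float(np.corrcoef(x, y)[0, 1])

def spearman(x, y):
    # Spearman's rho is Pearson's r computed on the ranks
    return pearson(ranks(x), ranks(y))

# Hypothetical toy data: a perfect line except for one planted outlier
temp = np.linspace(-4.1, 15.2, 100)
sales = 10 + 0.5 * temp
sales[0] = 50.0

r_pearson = pearson(temp, sales)
rho = spearman(temp, sales)
print(round(r_pearson, 2))  # about 0.45
print(round(rho, 3))        # 0.941
```

In rank terms the outlier only swaps from the lowest to the highest position, so Spearman stays near 0.94 while Pearson falls to roughly 0.45 on this toy.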

B. Important explanatory variables?

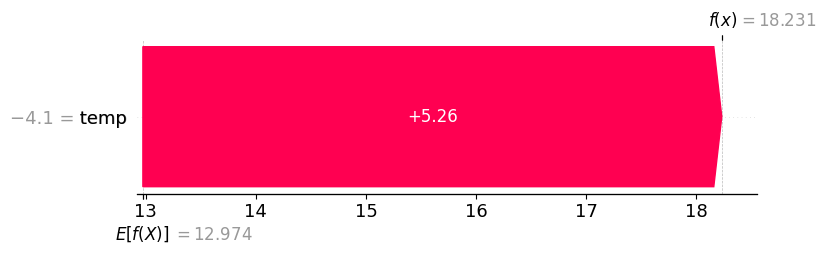

pass # there is only one explanatory variable, so there is nothing to rank

C. Per-observation interpretation

df_train.iloc[[0]]

| | temp | ice_sales |
|---|---|---|
| 0 | -4.1 | 50.0 |
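Row 0 is the planted outlier. As a toy illustration of how a local model treats it, the sketch below applies uniform-weight k-nearest-neighbour averaging (the idea behind the `KNeighborsUnif` entry in the training log) to only the five coldest rows displayed in the `df_train` table; `k=5`, the helper name `knn_predict`, and the restriction to these rows are assumptions, not AutoGluon's settings:

```python
import numpy as np

# The five coldest rows, copied from the df_train table
temp = np.array([-4.1, -3.7, -3.0, -1.3, -0.5])
sales = np.array([50.0, 9.234175, 9.642778, 9.657894, 9.987787])

def knn_predict(x, xs, ys, k):
    """Uniform-weight k-nearest-neighbour regression for one query point."""
    nearest = np.argsort(np.abs(xs - x))[:k]
    return float(ys[nearest].mean())

pred_with = knn_predict(-4.1, temp, sales, k=5)             # outlier among neighbours
pred_without = knn_predict(-4.1, temp[1:], sales[1:], k=4)  # outlier dropped
print(round(pred_with, 4))     # 17.7045
print(round(pred_without, 4))  # 9.6307
```

A local average neither reproduces the observed 50 nor ignores it: the outlier drags the estimate well above the roughly 9.6 level of its neighbours.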

predictr.predict(df_train.iloc[[0]])

0    18.230947
Name: ice_sales, dtype: float32

auto.explain_rows(
    train_data=df_train,
    model=predictr,
    rows=df_train.iloc[[0]],
    display_rows=True,
    plot='waterfall'
)

| | temp | ice_sales |
|---|---|---|
| 0 | -4.1 | 50.0 |
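Assuming the waterfall plot shows SHAP-style additive attributions (a base value plus one contribution per feature, summing to the prediction), a single-feature model leaves no room for ambiguity: the contribution of `temp` is simply the prediction minus the model's average output over the background rows. A minimal sketch with a hypothetical model `f` (the generating line) and a hypothetical five-point background set:

```python
import numpy as np

def shap_single_feature(f, x, background):
    """Exact Shapley value when the model has exactly one input feature.

    The empty coalition is valued at the average prediction over the
    background rows; the full coalition is f(x). Their difference is the
    whole attribution."""
    base_value = float(np.mean([f(b) for b in background]))
    phi = float(f(x)) - base_value
    return base_value, phi

# Hypothetical stand-in model and background temperatures
f = lambda t: 10 + 0.5 * t
background = np.array([-4.1, 0.0, 5.0, 10.0, 15.2])

base, phi = shap_single_feature(f, -4.1, background)
print(round(base, 2), round(phi, 2))  # 12.61 -4.66
```

The base value plus the single bar reconstructs the prediction exactly, which is why a waterfall for this dataset carries one bar for `temp`.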